| Uploader: | Quandrab |

| Date Added: | 02.03.2019 |

| File Size: | 61.16 Mb |

| Operating Systems: | Windows NT/2000/XP/2003/7/8/10 MacOS 10/X |

| Downloads: | 23954 |

| Price: | Free* [*Free Registration Required] |

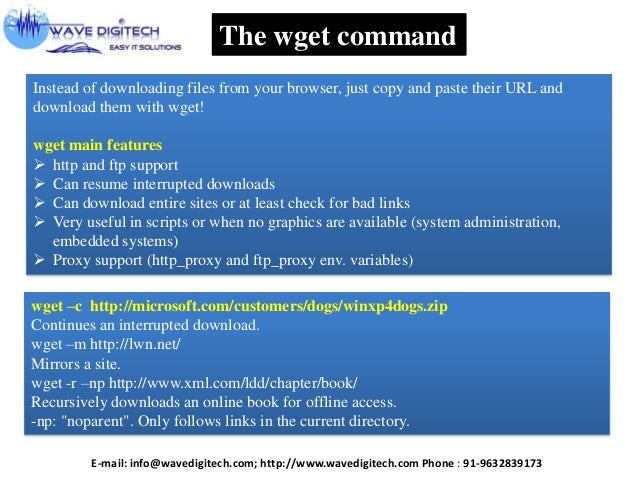

bash - How to download files without full URL? - Unix & Linux Stack Exchange

In the curl example, the author apparently believes it is important to show the user the progress of the download. For a very small file, that status display is not terribly helpful. Let's try it with a bigger file (the baby names file from the Social Security Administration).

I have always wondered how to download files through the Linux shell (I have wget and curl) when the full URL of the file is not known in advance, because it is only passed to the browser when a specific URL is visited. When I try downloading it through the Linux shell (with either wget or curl), all I get is an HTML file.

A shell script to download a URL (and test website speed): to check (a) GoDaddy website downtime and (b) GoDaddy 4GH performance, I wrote a Unix shell script to download a sample web page from my website, and then ran it from my Mac every two minutes. Use grep to get all lines from the file download.
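The author's script is not reproduced here, so the following is only a minimal sketch of the same idea: fetch a page with curl, record the status code and total time, and filter the log afterwards. The helper names `log_fetch` and `slow_fetches`, the log format, and the placeholder URL are all assumptions made for illustration. The filter uses awk rather than grep because it compares numbers.

```shell
#!/bin/sh
# Hypothetical helpers; the log format "<unix_time> <http_code> <seconds>"
# is an assumption, not the original author's format.

# Fetch a URL, discard the body, and append one timing line to a log file.
log_fetch() {
    url=$1
    log=$2
    # -s: silent, -o /dev/null: throw the body away,
    # -w: print the HTTP status code and the total transfer time
    result=$(curl -s -o /dev/null -w '%{http_code} %{time_total}' "$url")
    printf '%s %s\n' "$(date +%s)" "$result" >> "$log"
}

# Print the log lines whose total time exceeds a threshold given in seconds.
slow_fetches() {
    awk -v limit="$2" '$3 + 0 > limit + 0' "$1"
}

# Example (placeholder URL and log path):
# log_fetch "https://example.com/" "$HOME/fetch.log"
# slow_fetches "$HOME/fetch.log" 2.0
```

Running `log_fetch` every two minutes could be done from cron or a simple `while` loop with `sleep 120`; which one suits better depends on the machine.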

Unix download file from url


Is there a unix command I can use to pull a file from a URL and put it into a directory of my choice? So I have a URL which if you go to it, a file will be downloaded. I want to be able to type a unix command to download the linked file from the URL I specify and place it into a directory of my choice.
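One way to do what the question asks, sketched under assumptions: `download_to` and `dest_path_for` are hypothetical helper names, and the URL in the example is a placeholder. The sketch uses curl when it is installed and falls back to wget otherwise.

```shell
#!/bin/sh
# Sketch only: download_to and dest_path_for are illustrative helper names.

# Build the destination path from the last path component of the URL.
dest_path_for() {
    url=$1
    dir=$2
    path=${url%%\?*}      # drop any query string
    name=${path##*/}      # keep what follows the last slash
    [ -n "$name" ] || name=index.html   # fall back when the URL ends in "/"
    printf '%s/%s\n' "${dir%/}" "$name"
}

# Download a URL into a directory of your choice.
download_to() {
    url=$1
    dir=$2
    mkdir -p "$dir" || return 1
    if command -v curl >/dev/null 2>&1; then
        # -f: fail on HTTP errors, -L: follow redirects, -o: output file
        curl -fL -o "$(dest_path_for "$url" "$dir")" "$url"
    else
        # -P: directory prefix under which wget saves the file
        wget -P "$dir" "$url"
    fi
}

# Example (placeholder URL):
# download_to "https://example.com/images/photo.png" "$HOME/Downloads"
```

For a one-off download the helpers are unnecessary: `wget -P DIR URL` or `curl -fL -o DIR/NAME URL` does the same thing directly.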

So if the URL is www.… I want to type a Unix command to download the image.

Use this to provide a username and password.

Asked 8 years, 9 months ago by Corey. Active 1 year, 11 months ago. Viewed 26k times.

I've tried curl and wget as suggested; however, I do not believe the file finishes downloading. The file I am attempting to download is a.

Both curl and wget provide progress indication for downloads.

I also got an error when trying wget: Connecting to www. HTTP request sent, awaiting response... No data received.

I suspect the freewarelovers site has set things up such that you cannot directly download the resource using the URL you provided.

Matthias Ostrowski


Use wget to download / scrape a full website


I know how to use the wget command to grab files. But how do you download a file using the curl command line under Linux, Mac OS X, BSD, or other Unix-like operating systems?

GNU wget is a free utility for non-interactive download of files from the Web. curl is another tool to transfer data from or to a server, using one of the supported protocols such as HTTP, HTTPS, FTP, FTPS, SCP, SFTP, and TFTP.

How to download files in Linux from the command line with a dynamic URL

wget and curl are great Linux commands for downloading files. But you may face problems when all you have is a dynamic URL.
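For the dynamic-URL case, where the visited address redirects to the real file or the server names the file in a response header, a sketch like the following may help. It is not taken from any of the quoted posts: `fetch_dynamic` and `looks_like_html` are hypothetical helper names, and the URL and filename in the example are placeholders. If the site requires a login, cookies, or JavaScript, this alone will likely still return an HTML page, which is what the second helper is meant to detect.

```shell
#!/bin/sh
# Sketch only: fetch_dynamic and looks_like_html are illustrative helper names.

# Follow redirects and save under the filename the server announces.
fetch_dynamic() {
    if command -v curl >/dev/null 2>&1; then
        # -f: fail on HTTP errors, -L: follow redirects,
        # -O -J: use the Content-Disposition filename when the server sends one
        curl -fL -O -J "$1"
    else
        # wget follows redirects by default; this flag honors Content-Disposition
        wget --content-disposition "$1"
    fi
}

# Succeed if the file begins like an HTML page rather than the expected payload.
looks_like_html() {
    head -c 512 "$1" | LC_ALL=C grep -qi -e '<!doctype html' -e '<html'
}

# Example (placeholder URL and filename):
# fetch_dynamic "https://example.com/download?id=123"
# looks_like_html report.pdf && echo "got an HTML page, not the file" >&2
```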
